Habitat AI

For the experiments, the simulator will be Habitat AI (Manolis Savva, 2019), one of the most widely used simulators in embodied AI. It is known for its speed, its support for a variety of third-party datasets, and its role in developing one of the richest visual datasets, the Habitat-Matterport 3D Semantics Dataset (Karmesh Yadav, 2022). It is an open-source project run by Meta’s Embodied AI division.

Figure: Habitat Synthetic Scenes Dataset (HSSD) (Mukul Khanna, 2023)

Tasks

The Habitat 1.0 paper outlines the “Object Navigation” task, in which the agent has to find an object in a room (Manolis Savva, 2019). The Habitat 2.0 paper outlines the “rearrange” task, in which the agent needs to move objects to their proper locations (Szot, 2022). Finally, section 4.1 of the Habitat 3.0 paper describes the “Social Navigation: Find & Follow People” task, in which the virtual agent follows a virtual human (Xavi Puig, 2023). Video demonstrations can be viewed on the Habitat 3.0 website (Meta-AI, 2023). Note also that Habitat 3.0 uses the new Habitat Synthetic Scenes Dataset (HSSD) (Mukul Khanna, 2023), as shown in the HSSD figure.

BEHAVIOR-1K

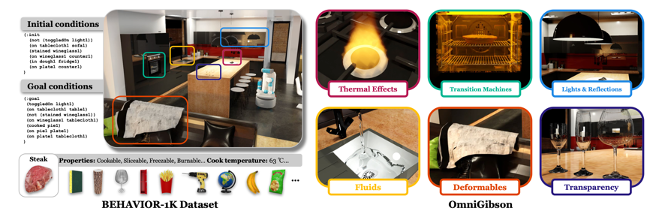

The BEHAVIOR-1K simulator provides visually realistic environments for agents and defines 1,000 everyday household activities for training agents (Li, 2022). This variety of tasks and environment interactions is integral to training an intelligent agent. An image showcasing the environment’s properties can be seen in Figure 9, and more information can be found on the project website (Lab, 2022).

Figure 9: Explanatory image of the BEHAVIOR-1K simulator.

ManiSkill2

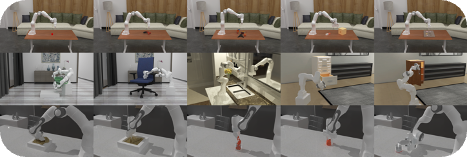

The ManiSkill2 simulator focuses on object manipulation using a robotic arm. It is a rich environment for training a robotic arm to interact with objects across 20 manipulation task families (Gu, ManiSkill2: A Unified Benchmark for Generalizable Manipulation Skills, 2023). These tasks include identifying, grasping, and placing objects with a variety of textures, shapes, and sizes. An image of the environment can be seen in Figure 10.

Figure 10: ManiSkill2 environment with a robotic arm performing tasks.

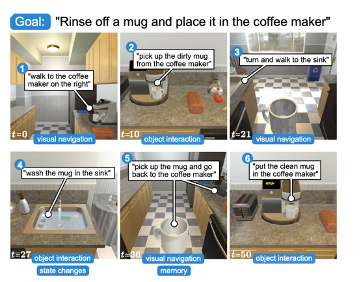

ALFRED

ALFRED (Action Learning From Realistic Environments and Directives) is a benchmark for developing and evaluating models that follow natural language instructions to perform household tasks in a realistic 3D environment (Mohit Shridhar, 2019). An image of the environment can be seen in Figure 11.

Bonus

RH20T: A Comprehensive Robotic Dataset for Learning Diverse Skills in One-Shot